What to Do When Your MySQL Table Grows Too Wide

Maintaining a table with hundreds—or even thousands—of columns can quickly become unmanageable. MySQL imposes hard limits on column counts and row sizes, leading to errors like ERROR 1117 (HY000): Too many columns or failed ALTER TABLE operations. Below, we explain key concepts and walk through five strategies to keep your schema healthy and performant.

Key Concepts Explained

- Hard Limit: The absolute maximum number of columns MySQL’s storage engine supports (e.g., 1017 for InnoDB, 2599 for MyISAM).

- Practical Limit: A more realistic ceiling based on metadata, row-size, and engine quirks (often 60–80% of the hard limit).

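- Row-Size Limit: MySQL restricts each row to 65,535 bytes, not counting the contents of TEXT/BLOB columns (each of those contributes only 9 to 12 bytes). Exceeding this causes CREATE TABLE or ALTER TABLE to fail. InnoDB adds a tighter limit on data stored in the page itself: slightly less than half a page, or just under 8 KB with the default 16 KB page size.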

- Dynamic vs. Fixed Row Format: Variable-length formats such as DYNAMIC can store long TEXT/BLOB values off-row (in InnoDB, only a 20-byte pointer stays in the row), reducing in-row size; a fixed-length format stores the full payload in the row.

- Vertical Partitioning: Splitting one wide table into multiple narrower tables linked by a foreign key (id).

- EAV (Entity-Attribute-Value): A generic schema model storing attributes as rows (key, value) rather than columns.

- JSON Column: A flexible, single column that holds a JSON object, allowing dynamic fields with optional indexing via generated columns.
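A quick way to see the row-size limit is to declare columns whose worst-case byte widths add up to more than 65,535. The sketch below assumes MySQL 8.0 and uses hypothetical table names. With utf8mb4, a VARCHAR(10000) can need up to 40,000 bytes plus 2 length bytes, so the first statement is expected to be rejected with ERROR 1118 (Row size too large), while the TEXT version is accepted.

```sql
-- Hypothetical table names; assumes MySQL 8.0 and the utf8mb4 character set.

-- Expected to fail: 2 x (10,000 chars x 4 bytes + 2 length bytes) = 80,004 bytes > 65,535.
CREATE TABLE row_size_too_big (
  c1 VARCHAR(10000),
  c2 VARCHAR(10000)
) ENGINE = InnoDB CHARACTER SET utf8mb4;

-- Accepted: each TEXT column counts only 9 to 12 bytes toward the 65,535-byte limit,
-- and the DYNAMIC row format lets InnoDB keep long values off-page.
CREATE TABLE row_size_ok (
  c1 TEXT,
  c2 TEXT
) ENGINE = InnoDB CHARACTER SET utf8mb4 ROW_FORMAT = DYNAMIC;
```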

1. Optimize Column Types in InnoDB

Goal: Squeeze your schema under InnoDB’s 1017-column hard limit (practical: ~600–1000).

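- Use Small Numeric Types: Replace INT with SMALLINT or TINYINT for flags and counters.

- Convert TEXT/VARCHAR: If a string is always 10 characters or fewer, use CHAR(10) or even a fixed-length BINARY field.

- Combine Booleans: Pack up to 64 boolean flags into a single SET column (at most 8 bytes) instead of separate TINYINT(1) columns.

- Scale Decimals: Convert DECIMAL(10,2) into an integer by multiplying by 100 (e.g., store 1234 for $12.34). DECIMAL(10,2) takes 5 bytes and INT takes 4, but a signed INT tops out at 2,147,483,647 cents (about $21.4 million); larger amounts need an 8-byte BIGINT, which erases the saving.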
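The bullets above map onto ordinary ALTER TABLE and UPDATE statements. The sketch below assumes MySQL 8.0 in strict SQL mode and a table your_table with hypothetical columns id, retry_count INT, is_active TINYINT(1), is_verified TINYINT(1), and price DECIMAL(10,2). The DROP COLUMN steps discard the old columns, so run them only after checking the migrated values.

```sql
-- Hypothetical columns: id, retry_count INT, is_active TINYINT(1),
-- is_verified TINYINT(1), price DECIMAL(10,2). Assumes strict SQL mode.

-- 1. Shrink a counter: SMALLINT UNSIGNED holds 0..65535 in 2 bytes (INT uses 4).
--    In strict mode this fails if any existing value is outside that range.
ALTER TABLE your_table MODIFY COLUMN retry_count SMALLINT UNSIGNED;

-- 2. Fold two boolean columns into one SET column.
ALTER TABLE your_table
  ADD COLUMN flags SET('is_active', 'is_verified') NOT NULL DEFAULT '';

-- CONCAT_WS skips NULL arguments, so rows with no flag set get '' (the empty set).
UPDATE your_table
SET flags = CONCAT_WS(',',
                      IF(is_active = 1, 'is_active', NULL),
                      IF(is_verified = 1, 'is_verified', NULL));

ALTER TABLE your_table DROP COLUMN is_active, DROP COLUMN is_verified;

-- Query a single flag.
SELECT id FROM your_table WHERE FIND_IN_SET('is_verified', flags) > 0;

-- 3. Store money as integer cents (1234 = $12.34).
--    INT tops out at 2,147,483,647 cents; use BIGINT for larger amounts.
ALTER TABLE your_table ADD COLUMN price_cents INT NOT NULL DEFAULT 0;
UPDATE your_table SET price_cents = ROUND(price * 100) WHERE price IS NOT NULL;
ALTER TABLE your_table DROP COLUMN price;
```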

Tip: Query information_schema.COLUMNS (COLUMN_NAME, COLUMN_TYPE, CHARACTER_OCTET_LENGTH) to review column definitions and their byte sizes quickly.
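A minimal sketch of such a review, assuming the table is called your_table and lives in the current database (DATABASE()). Both statements are plain queries against information_schema.COLUMNS.

```sql
-- Column count, to compare against InnoDB's 1017-column limit.
SELECT COUNT(*) AS column_count
FROM information_schema.COLUMNS
WHERE TABLE_SCHEMA = DATABASE() AND TABLE_NAME = 'your_table';

-- Columns with the largest declared byte length first.
-- CHARACTER_OCTET_LENGTH is NULL for numeric and temporal types, so those sort last.
SELECT COLUMN_NAME, COLUMN_TYPE, CHARACTER_OCTET_LENGTH
FROM information_schema.COLUMNS
WHERE TABLE_SCHEMA = DATABASE() AND TABLE_NAME = 'your_table'
ORDER BY CHARACTER_OCTET_LENGTH DESC;
```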

Pros: Full ACID, foreign key support, crash-safe.

Cons: Diminishing returns; expect ~800–1000 max columns after optimization.

2. Switch to MyISAM

Goal: Use MyISAM’s 2599-column hard limit (practical: ~1400–1600).

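- Engine Swap: ALTER TABLE your_table ENGINE=MyISAM;

- TEXT/BLOB Pointers: Offload large fields into TEXT columns; each TEXT/BLOB column contributes only 9 to 12 bytes toward the 65,535-byte row-size limit because its contents are stored separately from the rest of the row.

- Monitor: Test adding columns via ALTER TABLE ADD COLUMN until you hit the limit.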

Keyword: Dynamic Row Format. MyISAM's DYNAMIC format stores each row at its actual length rather than its maximum declared length, shrinking the on-disk footprint at the cost of possible fragmentation.
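The engine swap and a quick check of the result might look like the following. It is a sketch assuming MySQL 8.0 and the your_table name from the bullet above. Because MyISAM has no foreign keys, the ALTER is expected to be rejected if the table participates in a foreign key constraint.

```sql
-- Assumes MySQL 8.0. Expected to fail if your_table takes part in a FOREIGN KEY,
-- since MyISAM cannot enforce foreign keys.
ALTER TABLE your_table ENGINE = MyISAM, ROW_FORMAT = DYNAMIC;

-- Confirm the new engine and row format.
SELECT ENGINE, ROW_FORMAT
FROM information_schema.TABLES
WHERE TABLE_SCHEMA = DATABASE() AND TABLE_NAME = 'your_table';
```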

Pros: More columns; simple file-based storage.

Cons: No transactions, table locks, manual crash recovery, no foreign keys.

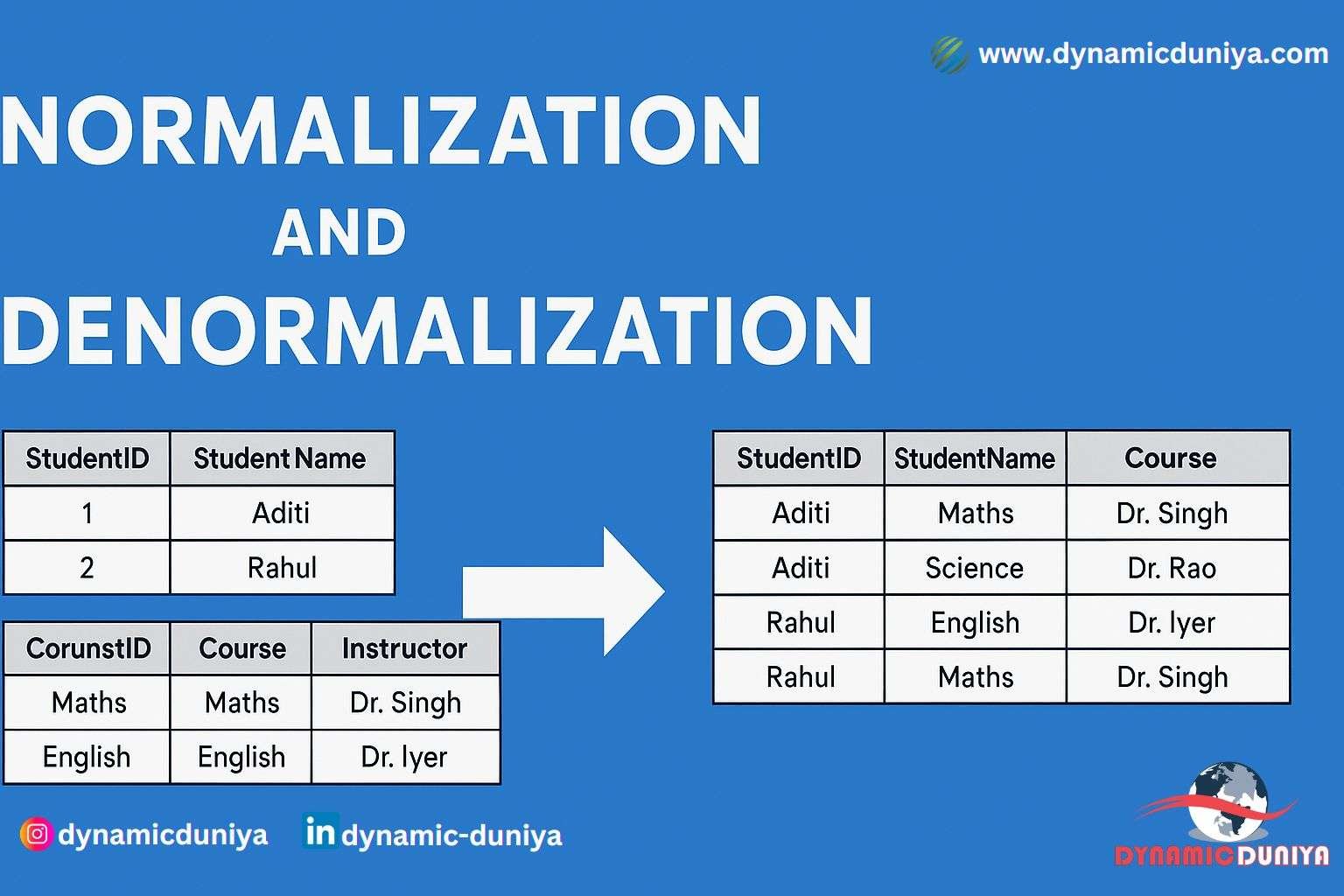

3. Vertical Partitioning: Separate Tables

Goal: Break a monster table into logical chunks.

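```sql
CREATE TABLE entity_core (
  id INT PRIMARY KEY,
  core1 … coreN
);

CREATE TABLE entity_ext1 (
  id INT PRIMARY KEY,
  extA … extZ,
  -- The PRIMARY KEY already indexes id; the foreign key ties each row to its core row.
  FOREIGN KEY (id) REFERENCES entity_core (id)
);

-- JOIN on id when querying across parts
```

Why it works: Each subtable stays well under the column and row-size limits.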

Pros: Better organization, feature-based grouping, fewer column headaches.

Cons: More joins, schema management overhead.
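Here is a concrete version of the split. The entity_billing table and the columns name, created_at, vat_number, and credit_limit_cents are hypothetical stand-ins for the elided core and extension columns, and InnoDB is assumed so that the foreign key is actually enforced.

```sql
-- Hypothetical columns; InnoDB is required for the FOREIGN KEY to be enforced.
CREATE TABLE entity_core (
  id INT PRIMARY KEY,
  name VARCHAR(100) NOT NULL,
  created_at DATETIME NOT NULL
) ENGINE = InnoDB;

-- One extension table per feature area, sharing the parent's primary key (1:1).
CREATE TABLE entity_billing (
  id INT PRIMARY KEY,
  vat_number VARCHAR(20),
  credit_limit_cents INT,
  CONSTRAINT fk_billing_core FOREIGN KEY (id) REFERENCES entity_core (id)
    ON DELETE CASCADE
) ENGINE = InnoDB;

INSERT INTO entity_core (id, name, created_at) VALUES (42, 'Acme', NOW());
INSERT INTO entity_billing (id, vat_number, credit_limit_cents)
VALUES (42, 'DE123456789', 500000);

-- LEFT JOIN keeps entities that have no billing row yet.
SELECT c.id, c.name, b.vat_number, b.credit_limit_cents
FROM entity_core AS c
LEFT JOIN entity_billing AS b ON b.id = c.id
WHERE c.id = 42;
```

Keeping the extension table's primary key equal to the parent's id makes the relationship one-to-one, so each join is a primary-key lookup.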

4. Hybrid JSON Column for Sparse Data

Goal: Keep hot (frequently queried) fields as native columns; offload cold or sparsely used fields into one JSON column.

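```sql
CREATE TABLE records (
  id INT PRIMARY KEY,
  name VARCHAR(100),
  created_at DATETIME,
  data JSON,
  -- Functional index (MySQL 8.0.13+). COLLATE utf8mb4_bin matches the collation
  -- that ->> returns, so WHERE data->>'$.status' = '...' can use this index.
  INDEX ((CAST(data->>'$.status' AS CHAR(10)) COLLATE utf8mb4_bin))
);
```

- Keyword: Generated Columns. Define virtual or stored columns that extract JSON keys for indexing; MySQL implements a functional index such as the one above as a hidden virtual generated column.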

Pros: Lean core schema, flexible attributes, modern indexing capabilities.

Cons: JSON parsing overhead, complexity in writing queries.
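Declaring a generated column explicitly makes the extracted value visible and queryable by name, instead of hiding it behind a functional index. This sketch assumes MySQL 8.0 and the records table from this section; the column name status_val, the index name, and the sample values are hypothetical.

```sql
-- Assumes the `records` table from this section (MySQL 8.0).
-- Hypothetical: expose $.status as a virtual generated column.
-- VARCHAR(32) assumes status values never exceed 32 characters; longer values
-- would make INSERT/UPDATE fail under strict SQL mode.
ALTER TABLE records
  ADD COLUMN status_val VARCHAR(32) GENERATED ALWAYS AS (data->>'$.status') VIRTUAL;

ALTER TABLE records ADD INDEX idx_records_status_val (status_val);

-- Hot fields go in native columns; cold, sparse fields go into the JSON document.
INSERT INTO records (id, name, created_at, data)
VALUES (1, 'Widget', NOW(), JSON_OBJECT('status', 'active', 'color', 'blue'));

-- Filter through the indexed generated column, then read a cold field from JSON.
SELECT id, name, data->>'$.color' AS color
FROM records
WHERE status_val = 'active';
```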

5. EAV (Entity-Attribute-Value)

Goal: Represent each field as a row in a key-value store.

```sql
CREATE TABLE entity_attributes (
  entity_id INT,
  attr_key VARCHAR(64),
  attr_value TEXT,
  PRIMARY KEY (entity_id, attr_key)
);
```

- Keyword: Sparse Data Model. Ideal when entities have vastly different attribute sets.

Pros: Unlimited attributes, dynamic schema.

Cons: Complex SQL for pivoting, slow attribute-wide scans.
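The pivoting cost is easiest to see in a query. The sketch below uses the entity_attributes table from this section with hypothetical keys color and material; conditional aggregation (MAX over CASE) turns attribute rows back into columns.

```sql
-- Hypothetical attribute keys ('color', 'material') for illustration.
INSERT INTO entity_attributes (entity_id, attr_key, attr_value) VALUES
  (1, 'color', 'red'),
  (1, 'material', 'steel'),
  (2, 'color', 'blue');

-- Pivot: one output row per entity, one CASE expression per attribute.
-- Entities lacking an attribute get NULL in that column.
SELECT entity_id,
       MAX(CASE WHEN attr_key = 'color' THEN attr_value END)    AS color,
       MAX(CASE WHEN attr_key = 'material' THEN attr_value END) AS material
FROM entity_attributes
GROUP BY entity_id;

-- Search by value: the primary key leads with entity_id,
-- so this lookup cannot seek on it directly.
SELECT entity_id
FROM entity_attributes
WHERE attr_key = 'color' AND attr_value = 'red';
```

With these sample rows, the pivot returns (1, red, steel) and (2, blue, NULL). Every attribute you want as an output column needs another CASE expression.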

Choosing the Right Approach

| Scenario | Strategy |
|---|---|
| Need ACID + FKs | InnoDB + column optimization |
| Need max columns, plain speed | MyISAM |
| Data is sparse or modular | JSON hybrid |
| Highly dynamic attributes per row | EAV model |
| Moderate columns + relational integrity | Vertical partitioning |

Final Advice: Analyze your query patterns and growth projections. A schema that brute-forces 2500+ columns usually backfires—choose a sustainable model from the start.
